COGNITIVE SCIENCE AND HUMAN-COMPUTER INTERACTION

UCSD has spearheaded the field of cognitive science, an interdisciplinary field that seeks to integrate the knowledge of psychology, neuroscience, and computation. It offers a number of specializations, including Human-Computer Interaction (HCI), in which I earned a Bachelor of Science in 2013. This specialization taught me how to apply my knowledge of human thought and behavior to technology, and showed me techniques for translating my insights into tangible, human-centered designs. I acquired this knowledge through applied, project-based courses and by working in research labs.

In this section, I have included the purpose, process, and results of two of the project-based courses and two of the research labs that have taught me the skills that I continue to use in my work today.

Cognitive Design Studio (COGS 102C)

This course was a hands-on introduction to rapid contextual design, following Rapid Contextual Design: A How-to Guide to Key Techniques for User-Centered Design, a textbook by Holtzblatt, Burns Wendell, and Wood. We applied the design techniques we learned to take a project from inception to high-fidelity prototype. We assembled a team of 8 people known as the Mobile App Redesign Squad, as our project of choice was to revamp UCSD's mobile app. I acted as group leader, helping to organize meetings, facilitate discussion, write on the whiteboard, and maintain the project focus. At the end of the course, we presented our deliverables and results to the developers of the existing UCSD mobile app for their consideration.

STEP 1: INITIAL USER INTERVIEWS

Once we had decided on a project, we met to decide which users we were going to interview and the kinds of questions we would ask them. We set up interviews across a wide variety of user demographics: current students of all years, transfer students, grad students, professors, staff, and incoming students. We conducted roughly 60 interviews in total. We even set up a meeting with the existing app's designers in order to get their perspective on design decisions.

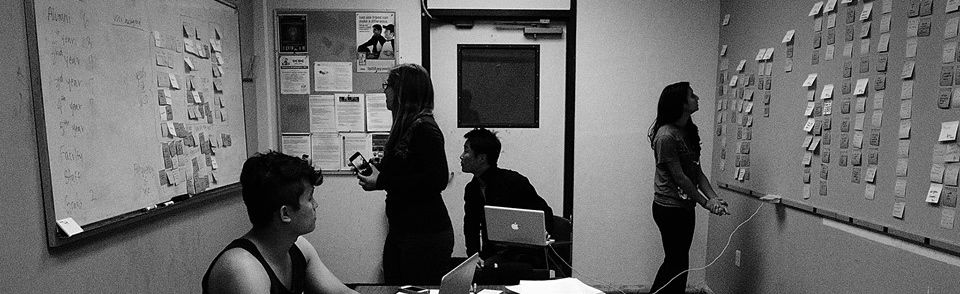

STEP 2: 1ST ROUND AFFINITY DIAGRAM

Once we had a mass of data, we attempted to visualize it using an affinity diagram. We established 3 meta-groups (demographic information, functionality, and aesthetics), and then placed post-its as we saw fit into each of the categories. Once we had our notes sorted into these three groups, we divided each one into many smaller categories based on the themes that emerged.

STEP 3: NARROWING OUR FOCUS

After examining our affinity diagram, we came to three conclusions: we needed more data to fill in some gaps, we needed to do our interviews more contextually, and we needed to narrow our scope. We came back with more data and reexamined the diagram, and after reorganizing some categories, a major theme emerged. We decided that the purpose of our redesign was to center the information architecture of the app around the goals that real users had, instead of around disparate school departments. We also decided to focus only on current students.

STEP 4: 2ND ROUND AFFINITY DIAGRAM

Once we had established our perspective, we gathered more data from current students only, prompting them with goal-driven questions. We came back with our new data and created a second affinity diagram.

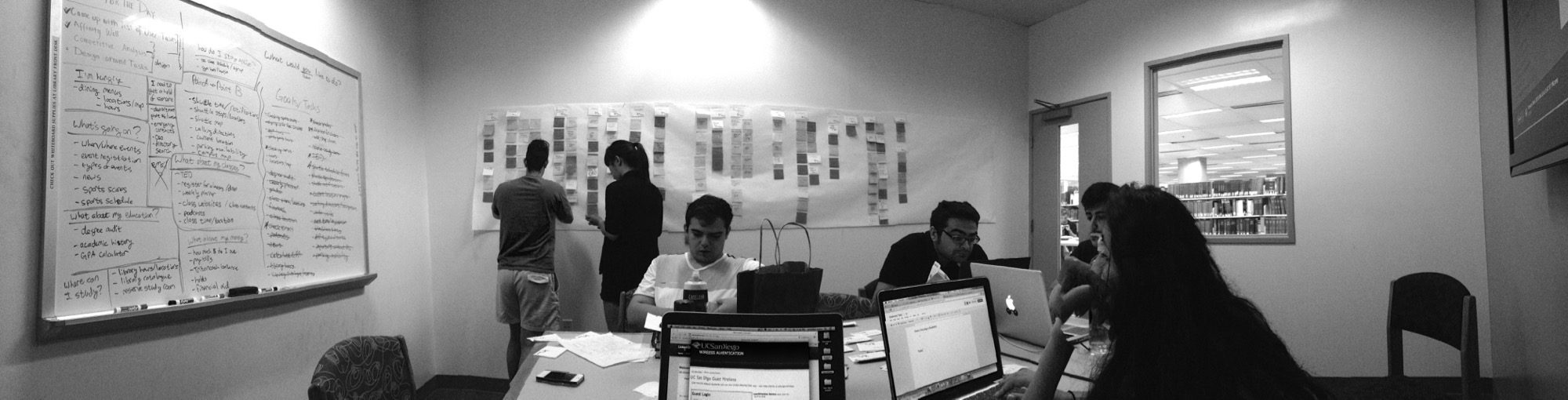

STEP 5: CONSOLIDATING SEQUENCES

The next step was combining our two affinity diagrams into one master diagram and seeing what patterns emerged. Once we had established categories, we started grouping related titles on a whiteboard into action sequence groups. Once those sequences were established, we gave them goal-related titles from the user's perspective (e.g. "I need to sign up for classes.").

STEP 6: COMPETITIVE ANALYSIS

To gather more data from a different angle, we performed a competitive analysis. Each member of our group downloaded another major university's app and explored it, making note of what they liked and didn't like. We combined our results and used them to inform the visual design and feature list of our app.

STEP 7: PERSONA DEVELOPMENT

After all the data collection, we had a firm grasp of who our users were, and wanted to personify them. Within the current student demographic, we found variation on two dimensions: experience with college and exposure to our particular university. Crossing these, we got four user groups: freshmen, seniors, first year transfers, and experienced transfers. With this established, we divided up our list of user goals amongst the personas and wrote user stories to support them.

STEP 8: VISIONING AND SEQUENCE MODELS

Once our stories were written, we needed to start making our action sequences more specific to the app. We created a number of conceptual flows of varying fidelity, from the thought process that the user would have while trying to achieve the goal, to the specific hierarchy the app would need to have in order to support that flow.

STEP 9: LOW FIDELITY WIREFRAMES

Once these specific workflows were decided on, we began to prototype. We decided to approach it hierarchically to ensure the greatest number of ideas would be heard. Each individual member of the group mocked up a few main screens on paper, then we split into two groups to discuss everyone's ideas and narrow them down to two. We then mocked up the combined prototypes.

STEP 10: USER INTERVIEWS WITH PROTOTYPES

While still in two separate groups, we went out and did user interviews with the prototypes, so we would have some real data to support our design decisions when we came back together as a group. We got a lot of support for both designs, so we also did some A/B testing and Google surveys to settle the last of our decisions.

STEP 11: PROTOTYPE ITERATION

After the two groups had come back together and decided on a design, we moved on with our next iteration. We took advantage of a fantastic app called Prototyping on Paper (POP) which allowed us to create a prototype that is low in fidelity but high in functionality. It allowed us to sketch pictures of each page of our app on paper, take a picture of each page, and hyperlink everything together. We went back and did one more round of interviews with the POP prototype before we finalized our design.

STEP 12: HIGH FIDELITY PROTOTYPE

Finally, we created high-fidelity prototypes in OmniGraffle to mock up our final design. The short length of the class prevented us from actually developing the app, but we were still able to get the look of the final product in OmniGraffle, and the feel of it in POP.

FINAL PRODUCTS: PRESENTATION AND PAPER

These are our final deliverables. We gave a presentation to the class detailing our process, as well as enumerating some users' needs and how our app responded to them. For the final paper, we collaborated on a document that described all of the actions we took over the course of the project, and the implications for our design.

Professional Web Design (COGS 187B)

This course entailed finding a real-world client and creating a functional website for them from scratch. This immersive course taught us both the theoretical knowledge of heuristic analyses and information architecture and the practical skills that come with dealing with an actual client. The three members of my group collaborated and shared the tasks of designing and developing the website. We took on the challenge of building a website for a local stair craftsman. Long after the end of the course, the site is live and aiding the business.

Step 1: Initial requirements gathering

Once we had met with our client and decided to do business together, we sat down with them to discuss their goals for the project and their expectations for us. We discussed the constraints and wishes that they had, as well as the yield they expected for their business as a result of our help. We then drew up a contract making all of our agreements explicit so we would have a resource in the event of misunderstandings down the road.

Step 2: User interviews and personas

We met with the client to discover who they perceived their target customers to be. Once that was established, my team went out and found real users and interviewed them about their needs and perspectives in relation to our client's business. We then consolidated our notes and used them to create personas so we would have concrete (although fictional) users to point to as we designed the website.

Step 3: Competitive analysis

To gain some breadth of understanding, we looked at the websites of our client's closest competitors. We took notes and screenshots, and annotated each screenshot with details of what was and was not user friendly.

Step 4: Creative brief

Having collected a fair amount of data, we compiled it into a progress report for our client. We reported all our data as well as our interpretation of it, and discussed how we planned to move forward with it in our design for their website.

Step 5: Low fidelity wireframes and storyboards

Once we had clarified our creative goals, we began to work visually on the website. We sketched low-fidelity wireframes, and annotated them to highlight important features. We also annotated them describing how the user would go about interacting with each feature.

Step 6: Content inventory

Before we got down to the business of developing the website, we referenced our storyboards to make a content inventory of all of the features we wanted. We described all of the features, as well as all of the copy that needed to be written and other content that needed to be generated or acquired. We created a checklist, and divided the labor to ensure everything was completed.

Step 7: High fidelity wireframe

While one of my teammates was acquiring content and the other was waiting to start coding, I mocked up some high fidelity wireframes in Photoshop. Although I was the one who executed the wireframes, the process of designing them was highly collaborative between my teammates and me.

Step 8: The final, live product

After the high fidelity images were set, it was just a matter of translating them into code. We collaborated to discuss what kind of interactivity we wanted the features to have, and checked each other's work constantly to ensure that we were abiding by usability heuristics and staying congruent with the data. After the class was finished, the site went live, and has been highly complimented by clients and customers ever since.

Distributed Cognition HCI Lab

The Distributed Cognition and Human-Computer Interaction (DCogHCI) Lab is a research lab at UCSD that uses cognitive ethnography to study the interactions between agents, their environments, and technology. The findings can then be used to redesign the technology and environments so that they fit better with agents' behavior and cognition. I contributed to two papers while working in this lab, both concerning medical exam rooms.

For my research, my colleagues and I collected multimodal data from a local clinic and studied the interactions between the physician and the patient, and noted the changes in the dynamics when the patients required a translator to communicate. We focused our attention on the patterns of interaction that occurred between the people and the environment, modeling the affordances and limitations of the electronic medical records, paper records and drawings, and the seating arrangement. These conclusions were used to suggest ways of redesigning the exam room to better facilitate communication and improve patient understanding. My team had a paper accepted to the PervasiveHealth 2013 conference, to which I have included a link.

Nadir Weibel, Colleen Emmenegger, Jennifer Lyons, Ram Dixit, Linda L. Hill, and James D. Hollan. Interpreter-Mediated Physician-Patient Communication: Opportunities for Multimodal Healthcare Interfaces, In Proceedings of PervasiveHealth 2013, International Conference on Pervasive Computing Technologies for Healthcare, Venice, Italy, May 2013.

My fellow research assistant and I attended the HCI International (HCII) conference in Las Vegas in July 2013. We presented a poster based on the same data as the PervasiveHealth paper, though with a more HCI-driven perspective. I was responsible for roughly half the written work of the abstract, as well as collaborating on the design of the poster.

Jennifer Lyons, Ram Dixit, Colleen Emmenegger, Nadir Weibel, and James D. Hollan. Factors affecting physician-patient communication in the medical exam room, In Proceedings of HCI International 2013, 15th International Conference on Human-Computer Interaction (Abstracts).

Aviation Lab

In 2011, I joined an ethnography lab on campus that did research on airline cockpits for Boeing. We collected multimodal data from simulators at Boeing headquarters with the goal of mapping pilots' attention as they interacted with the instruments and artifacts in the cockpit. The data was integrated in an application known as ChronoViz, where research assistants like myself could aid in the visualization and annotation of the data.

Here I have included a technical report given to Boeing summing up our research for the year 2011. I transcribed the audio data that was collected, assisted in annotating and eventually analyzing the data, and made written contributions to the technical report itself. I have also included an image of a conceptual model of possible interactions within the flight deck system, which I drew during the data visualization process.

Emmenegger, Colleen, Nadir Weibel, Adam Fouse, Whitney Friedman, Lara Cheng, Sara Kimmich, Jennifer Lyons, and Edwin Hutchins. Accelerating the Analysis of Flight Deck Activity: Final Report of UCSD-Boeing Project Agreement 2011-2012. Tech. N.p.: n.p., n.d. Print.